Standard fabrication technologies are undoubtedly an essential building block for the commercial exploitation of photonics, but they must still face an unavoidable reality that hampers their success: uncertainty. A brief walk through the world of photonic circuit design, looking for novel approaches and seeking stability amid imperfections.

In the last few years, photonics has rapidly emerged as a mature and promising technology, evolving from a pure research topic into a market-ready player that aims at large production volumes and low fabrication costs. Europe has been a forerunner in this paradigm shift, with a large number of EU-funded projects, groups and companies focusing on the topic since the mid-2000s, but intense activity is also under way overseas, in particular with the large and well-known AIM Photonics in the United States. The entire approach revolves around a key concept, quite obvious in the electronics world: keep the fabrication technologies as stable, reliable and efficient as possible, and invest your effort in designing innovative circuits on top of these technologies. This is most certainly the only way to see commercial applications of photonic circuits that include more than a few basic building blocks. Process design kits, circuit simulators, generic foundry approaches and multi-project wafer runs all follow from this concept, as effective tools to implement it in the real world. These tools are changing the way photonic circuits are conceived and are finally opening up new developments from the design perspective as well. Stochastic analysis is surely among them.

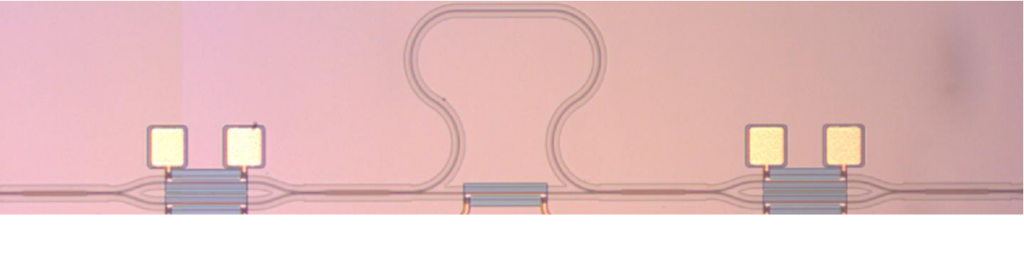

While standard fabrication technologies are an essential condition for the commercial exploitation of photonics, they must still cope with an unavoidable reality: uncertainty. Each fabrication run is subject to countless possible variations (waveguide geometry deviations, gap opening issues, material composition fluctuations, surface roughness) that may ultimately result in a fabrication yield too low to be economically sustainable. This is why statistical data and uncertainty quantification techniques are becoming fundamental instruments in photonics too, as already happened for electronics and microwave circuits, to efficiently achieve high-quality designs (high yield, small performance variability).
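To make "yield" concrete, here is a minimal sketch of how it is typically estimated by brute-force sampling (the Monte Carlo approach discussed next): draw random fabrication deviations, run the model once per sample, and count the fraction of circuits that meet the specification. Everything specific here is a hypothetical assumption for illustration: the `bandwidth_ghz` model and its coefficients, the 5 nm and 3 nm standard deviations of width and gap, and the 45 to 55 GHz specification window.

```python
import numpy as np

def bandwidth_ghz(width_dev_nm, gap_dev_nm):
    """Hypothetical black-box model of a filter bandwidth (GHz) versus
    waveguide-width and coupling-gap deviations (nm). Not a real device model."""
    return 50.0 + 0.8 * width_dev_nm - 1.2 * gap_dev_nm + 0.05 * width_dev_nm * gap_dev_nm

def monte_carlo_yield(n_samples, spec=(45.0, 55.0), sigma_nm=(5.0, 3.0), seed=0):
    """Fraction of random 'fabricated' circuits meeting the spec, plus its standard error."""
    rng = np.random.default_rng(seed)
    width_dev = rng.normal(0.0, sigma_nm[0], n_samples)  # assumed Gaussian deviations
    gap_dev = rng.normal(0.0, sigma_nm[1], n_samples)
    bw = bandwidth_ghz(width_dev, gap_dev)               # one "simulation" per sample
    passed = (bw >= spec[0]) & (bw <= spec[1])
    yield_est = passed.mean()
    # Binomial standard error: it shrinks only as 1/sqrt(N).
    std_err = np.sqrt(yield_est * (1.0 - yield_est) / n_samples)
    return yield_est, std_err

for n in (100, 10_000, 1_000_000):
    y, se = monte_carlo_yield(n)
    print(f"N = {n:>9,d}   yield = {y:.3f} +/- {se:.4f}")
```

Each hundredfold increase in the number of samples buys only one more decimal digit of precision on the yield. With a real circuit or electromagnetic solver in place of `bandwidth_ghz`, every one of those samples is a full simulation.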

Monte Carlo is the approach commonly used to evaluate the impact of fabrication uncertainties on the functionality of designed circuits. Although effective and implemented in virtually every design tool, it suffers from a slow convergence rate (its statistical error decreases only with the inverse square root of the number of samples) and therefore requires long computation times and often large computational resources. For this reason, stochastic spectral methods have recently been regarded as a promising alternative for statistical analysis, thanks to their fast convergence. The idea is to approximate the output quantity of interest (e.g. the transfer function, the power consumed by the circuit, the bandwidth of a filter) with an expansion on a set of polynomial basis functions of the uncertain parameters, orthonormal with respect to their probability distribution, known as the generalized polynomial chaos expansion. Once this surrogate model is known, it can be used in place of the original model (either circuit-based or electromagnetic) to analyse the stochastic behaviour of the circuit very efficiently, since it only requires evaluating a polynomial instead of solving complex models thousands or hundreds of thousands of times. A particularly interesting application is the possibility of optimising the circuit response in a few minutes, for example to increase the robustness of a parameter (e.g. the bandwidth) against fabrication uncertainties.
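A minimal sketch of the surrogate idea for a single uncertain parameter, assuming a Gaussian deviation so that the orthonormal basis is made of normalized probabilists' Hermite polynomials `psi_k = He_k / sqrt(k!)`. The model `mzi_transmission` (a Mach-Zehnder interferometer biased at quadrature whose phase error grows linearly with an arm-width mismatch), its phase sensitivity, the 5 nm spread and the truncation degree are all hypothetical stand-ins for a real circuit or electromagnetic simulation. Here the coefficients are computed by Gauss-Hermite quadrature projection, one of several possible non-intrusive choices. Once they are known, the mean is the first coefficient, the variance is the sum of the squares of the others, and resampling the surrogate costs only polynomial evaluations.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as H

SIGMA_NM = 5.0        # hypothetical std dev of the arm-width mismatch (nm)
PHASE_PER_NM = 0.2    # hypothetical phase error per nm of mismatch (rad/nm)
DEGREE = 10           # truncation order of the expansion

def mzi_transmission(width_dev_nm):
    """Hypothetical black-box model: MZI biased at quadrature whose phase
    difference drifts linearly with the width deviation."""
    dphi = np.pi / 2 + PHASE_PER_NM * width_dev_nm
    return 0.5 * (1.0 + np.cos(dphi))

def psi(xi, degree):
    """Orthonormal Hermite basis psi_k = He_k / sqrt(k!) for xi ~ N(0, 1)."""
    norms = np.sqrt([math.factorial(k) for k in range(degree + 1)])
    return H.hermevander(xi, degree) / norms

def gpc_coefficients(model, degree, n_quad=20):
    """Project the model onto psi_k with Gauss-Hermite quadrature:
    c_k = E[model(SIGMA_NM * xi) * psi_k(xi)]."""
    nodes, weights = H.hermegauss(n_quad)
    weights = weights / np.sqrt(2.0 * np.pi)   # weights now sum to 1 (N(0,1) density)
    samples = model(SIGMA_NM * nodes)          # only n_quad model calls
    return psi(nodes, degree).T @ (weights * samples)

coeffs = gpc_coefficients(mzi_transmission, DEGREE)
mean_pce = coeffs[0]                  # E[f]   = c_0
var_pce = np.sum(coeffs[1:] ** 2)     # Var[f] = sum of c_k^2 for k >= 1

# Statistics from the surrogate: evaluating a polynomial, not the model.
xi = np.random.default_rng(1).standard_normal(200_000)
surrogate = psi(xi, DEGREE) @ coeffs
print(f"mean={mean_pce:.4f}  var={var_pce:.4f}  surrogate MC mean={surrogate.mean():.4f}")
```

For this toy model the exact statistics are known in closed form, E[T] = 1/2 and Var[T] = (1 - e^-2)/8 ≈ 0.108, and the expansion recovers them from only 20 model evaluations; a real solver would of course not come with such a check.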

There is, of course, another side of the coin. Computing the surrogate model is not an easy job, in particular when many uncertain parameters are involved (with a total-degree truncation of order p in d parameters, the number of basis functions is (d+p)!/(d! p!), which grows quickly with d), and it actually covers an entire research area in applied mathematics. There are basically two classes of methods to compute the coefficients of the basis functions, each with its own pros and cons. Intrusive (i.e. non-sampling) methods, such as stochastic Galerkin and stochastic testing, sometimes have a lower computational cost but require modifying the internal code of an existing deterministic solver. Conversely, non-intrusive (i.e. sampling-based) methods, including stochastic collocation and least-squares regression, use the deterministic solver as a black box, which is often more convenient in practice.
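A minimal sketch of the non-intrusive least-squares route for two uncertain parameters, assuming independent Gaussian deviations of waveguide width and thickness and a total-degree Hermite basis. The `black_box` function, its phase sensitivities, the spreads in `SIGMA`, the degree and the 500-run budget are hypothetical choices for illustration; the generous oversampling (500 runs for 21 basis functions) is deliberate, since least-squares fits on Hermite bases tend to need considerably more samples than terms to be stable.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as H

def black_box(width_dev_nm, thick_dev_nm):
    """Hypothetical deterministic solver used as a black box: MZI at quadrature
    with phase errors from width and thickness deviations (illustrative values)."""
    dphi = np.pi / 2 + 0.06 * width_dev_nm + 0.2 * thick_dev_nm
    return 0.5 * (1.0 + np.cos(dphi))

SIGMA = np.array([5.0, 2.0])   # hypothetical std devs (nm) of width and thickness
DEGREE = 5                     # total polynomial degree

# Total-degree multi-indices (i, j) with i + j <= DEGREE: 21 basis functions here.
MULTI_INDEX = [(i, j) for i in range(DEGREE + 1) for j in range(DEGREE + 1 - i)]

def design_matrix(xi):
    """Rows: samples; columns: psi_i(xi_1) * psi_j(xi_2), orthonormal Hermite."""
    norms = np.sqrt([math.factorial(k) for k in range(DEGREE + 1)])
    v1 = H.hermevander(xi[:, 0], DEGREE) / norms
    v2 = H.hermevander(xi[:, 1], DEGREE) / norms
    return np.column_stack([v1[:, i] * v2[:, j] for i, j in MULTI_INDEX])

rng = np.random.default_rng(2)
n_samples = 500                                  # generous oversampling (assumption)
xi = rng.standard_normal((n_samples, 2))         # standardized random inputs
y = black_box(*(SIGMA * xi).T)                   # one solver run per sample

coeffs, *_ = np.linalg.lstsq(design_matrix(xi), y, rcond=None)
mean_ls = coeffs[0]                              # index (0, 0) is the constant term
var_ls = np.sum(coeffs[1:] ** 2)
print(f"{len(MULTI_INDEX)} basis functions, {n_samples} solver runs: "
      f"mean={mean_ls:.4f}, var={var_ls:.4f}")
```

Nothing inside `black_box` is touched: replacing it with a call to an external circuit or electromagnetic simulator leaves the rest unchanged, which is the practical appeal of non-intrusive methods. For this toy model the exact values are 1/2 for the mean and (1 - e^-0.5)/8 ≈ 0.049 for the variance. The basis size also shows the scaling issue: 21 terms for 2 parameters at degree 5, but 252 for 5 parameters and 3003 for 10.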

Some of these techniques are well known and established, while others are open research problems, and photonics is quickly evolving, both acquiring this know-how and stimulating new developments. In the end, whichever approach and technique best suit each specific case, statistical data and efficient stochastic tools are going to be key instruments in the near future for photonic design on advanced technologies.
